State of natural language processing in surgery: Part 1

By: Cynthia Saver, MS, RN

Imagine this: As a nurse in the OR talks with a patient, a computer listens in, recording the nurse’s notes so that data are added automatically to be retrieved later. The surgeon and anesthesiologist also interact with the patient without the burden of manual data entry, and the insurance company has preauthorized the procedure using a computer-generated algorithm. Later, an algorithm ensures the facility receives maximum reimbursement, and another algorithm automatically extracts data for submission to the American College of Surgeons National Surgical Quality Improvement Program (ACS NSQIP) so it can be used to improve patient quality.

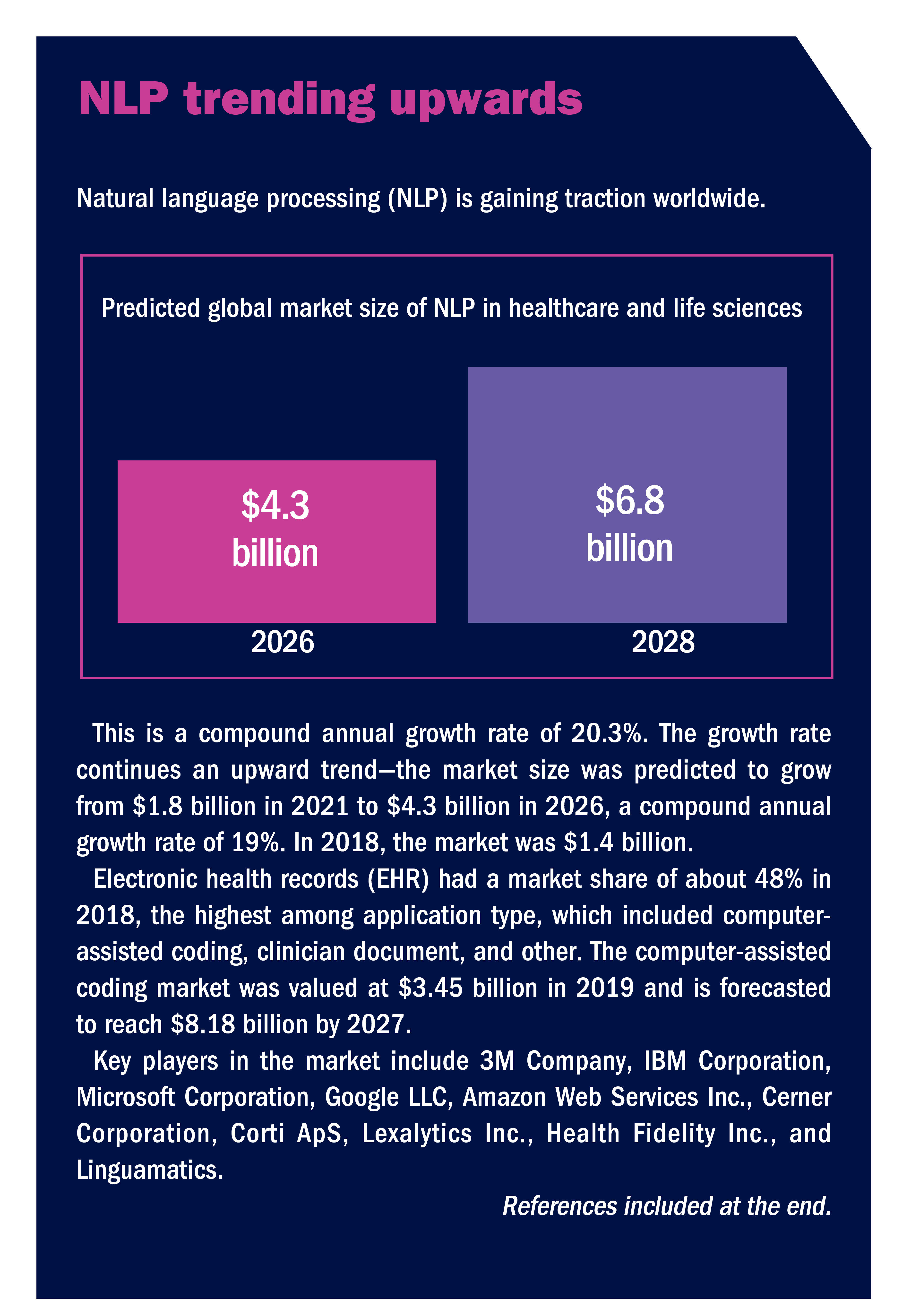

Natural language processing (NLP) may make this scenario commonplace in the future. It is already being used to reduce manual data entry, improve coding, and enhance research efforts. NLP is becoming a global phenomenon, with a predicted compound annual growth rate of 20.3% from 2026 to 2028 (sidebar, NLP trending upwards). “It’s exciting,” says Yaa Kumah-Crystal, MD, MPH, a pediatric endocrinologist who works on improving the usability of electronic health records (EHR) through NLP and is clinical director for health IT in the biomedical informatics department at Vanderbilt University Medical Center in Nashville, Tennessee. “It’s an opportunity for people to leverage technology, where we can interact more seamlessly with our computers, rather than just use a keyboard.”

Yaa Kumah-Crystal, MD, MPH


This two-part series looks at the role of NLP in surgery. Part 1 describes NLP and how it is being used in several areas, including speech recognition and preauthorization. Part 2 explores NLP’s role in outcomes and research, the challenges associated with its wider use, and considerations for purchasing NLP-related products.

What is NLP?

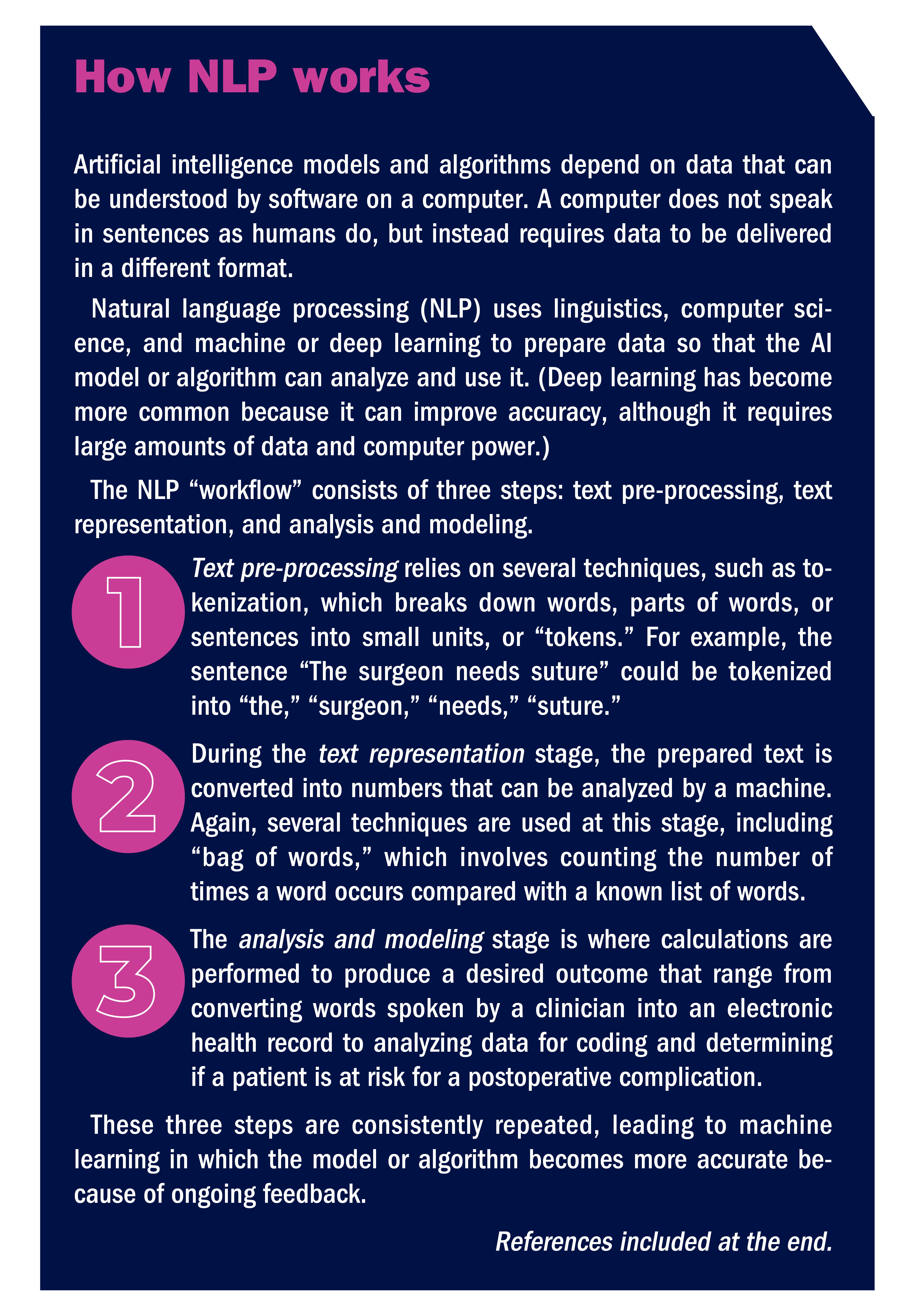

A McKinsey & Company report on NLP defines it as “a specialized branch of artificial intelligence [AI] focused on the interpretation and manipulation of human-generated spoken or written data.” Maxim Topaz, PhD, MA, RN, the Elizabeth Standish Gill associate professor of Nursing at Columbia University Medical Center and Columbia University Data Science Institute in New York, says it refers to “a set of computer algorithms that allow computers to read human-generated text” (sidebar, How NLP works).

Maxim Topaz, PhD, MA, RN

Dimitrios Stefanidis, MD, PhD

NLP has several applications related to surgery including speech recognition, which allows clinicians to dictate notes in real time. But NLP’s true power lies in its ability to extract data that, through AI techniques such as machine learning (ML), computers can use to develop tools such as algorithms. These algorithms can support functions ranging from helping clinicians make better patient care decisions to ensuring accurate coding of surgical procedures.

NLP extracts structured data from sources such as the EHR, and it also extracts and converts unstructured data to structured data, so that a computer can “read” it. Structured data have a set format (eg, diagnoses, billing codes, and laboratory results), while unstructured data do not (eg, radiographs and MRI scans). “Structured data are things like vital signs on a flow sheet, and unstructured data are items that are in nurses’ notes and physicians’ progress notes,” says Davera Gabriel, RN, director for terminology management in biomedical informatics and data science at Johns Hopkins University in Baltimore. Most data in an EHR are unstructured, so the ability to harness this information is significant.

Davera Gabriel, RN


“NLP will parse the individual words in a sentence, the order of those words, and how close those words are to other important things,” Gabriel says in describing how unstructured data are processed. “The information is then put into a database where it can be extracted for other uses, such as a clinical decision support algorithm.” For example, an NLP algorithm analyzing free text in the EHR of a patient with heart disease would be able to recognize whether the patient currently has heart disease or has a family history of heart disease so that the information can be put into the correct database.
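To make the heart disease example concrete, here is a minimal rule-based sketch of the idea, assuming a single sentence of note text and a short, hypothetical list of trigger phrases. It looks only at the words that come before the concept, in the spirit of published rule-based tools such as NegEx; it is an illustration, not how any particular EHR vendor does this.

```python
import re
from typing import Optional

# Hypothetical trigger lists; real rule-based systems use much larger, curated lists.
FAMILY = re.compile(r"\b(family history of|mother|father|brother|sister)\b")
NEGATION = re.compile(r"\b(no|denies|negative for|without)\b")


def classify_mention(sentence: str, concept: str) -> Optional[str]:
    """Label a concept mention as 'current', 'family_history', or 'negated'.

    Returns None when the concept does not appear in the sentence.
    """
    text = sentence.lower()
    start = text.find(concept.lower())
    if start == -1:
        return None  # concept not mentioned at all
    before = text[:start]  # context window: the words preceding the concept
    if FAMILY.search(before):
        return "family_history"
    if NEGATION.search(before):
        return "negated"
    return "current"
```

With these rules, “Patient has heart disease” is labeled current, while “Family history of heart disease in father” is routed to family history. A sentence such as “No family history of heart disease” would still be labeled family history, one of many edge cases that push developers toward the ML approaches described next.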

Initially, much of NLP was “rule-based,” where the inputs were analyzed against a fixed set of rules entered by a programmer. A simple example is the autocorrect feature in Microsoft Word. However, Dr Kumah-Crystal says ML models have advanced the technology and are helping to better put information into context, although more work needs to be done. “The more we are able to base the inference on the context of what you are trying to say, the smarter the tools become,” she says. “And you need to be able to teach [the computer] like you teach a child, but there’s not an easy way to incorporate feedback into these tools.” She notes that building an interface that allows users to give feedback is essential in improving the computer’s function.

As NLP helps improve a computer’s ability to make inferences, the usefulness of information also will improve. “It makes us appreciate how sophisticated our minds are—it’s hard to replicate that,” Dr Kumah-Crystal says.

Here is a closer look at some of the areas where NLP can make a difference in the surgical world.

Speech recognition

Consumers experience speech recognition when they use products such as Siri and Alexa, see closed captioning on TV, or call a help line and receive automated responses. In these cases, “close enough” is an acceptable accuracy goal—for example, if a different song than the one requested is played, that may be annoying but it is not a serious problem. In the clinical setting, however, accuracy is vital from both a patient safety and a business perspective, and that is where NLP comes in.

“Speech recognition has been around for more than 50 years, but it made huge strides lately enabled by [AI],” says J. Marc Overhage, MD, PhD, chief medical informatics lead for Elevance Health, Indiana, and former chief medical information officer for Cerner. Many speech recognition programs incorporate interactive voice response (IVR), which enables a “conversation” to occur without a live person.

Stephen Morgan, MD


Speech recognition is primarily being applied in physician offices or clinic settings. One format is ambient voice technology: the computer “listens” to physician and patient conversations and interprets them by recognizing concepts. “If I’m interviewing a patient through AI, it can discern if it’s the patient or the doctor speaking and create a note out of that conversation,” says Stephen Morgan, MD, senior vice president and chief medical information officer for Carilion Clinic in Roanoke, Virginia. It also knows to put data such as orders or vital signs into designated areas in the EHR.

Dr Overhage uses the example of a patient with knee pain to explain how NLP identifies and links concepts. In a series of questions and answers with the physician, the patient reports persistent knee pain for the past 6 months, an inability to play volleyball because of that pain, and little relief from taking ibuprofen for the past 3 weeks. NLP identifies the concepts being discussed (knee pain, 6 months, volleyball, ibuprofen, past 3 weeks, and little effect on pain) and connects them. “This process is easy for us but hard for the computer,” says Dr Overhage. The computer must determine whether “3 weeks” goes with how long the patient has had pain, when they took ibuprofen, or how long since they played volleyball. “There are now pretty good commercial products that can do this,” he says.
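The “which concept does 3 weeks belong to” problem can be illustrated with a deliberately simple heuristic: attach each duration to the nearest concept mentioned before it, echoing Gabriel’s point that NLP weighs how close words are to one another. The three-item vocabulary and the nearest-preceding rule below are assumptions for illustration only; commercial products rely on far more sophisticated models.

```python
import re

# Hypothetical mini-vocabulary of concepts from the knee pain example.
CONCEPTS = ("knee pain", "ibuprofen", "volleyball")
# Matches phrases such as "6 months" or "3 weeks".
DURATION = re.compile(r"\b(\d+)\s+(day|week|month|year)s?\b")


def link_durations(sentence: str) -> dict[str, str]:
    """Attach each duration phrase to the closest concept that precedes it."""
    text = sentence.lower()
    # Position of the first occurrence of each concept in the sentence.
    found = [(text.find(c), c) for c in CONCEPTS if c in text]
    links = {}
    for match in DURATION.finditer(text):
        earlier = [(pos, c) for pos, c in found if pos < match.start()]
        if earlier:
            _, concept = max(earlier)  # nearest preceding concept wins
            links[concept] = match.group(0)
    return links
```

For “Knee pain for 6 months kept me from volleyball; ibuprofen for 3 weeks did little,” this heuristic links “6 months” to knee pain and “3 weeks” to ibuprofen. Reword the sentence and the proximity rule can easily pick the wrong concept, which is exactly why Dr Overhage calls the task hard for a computer.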

Topaz says that ambient voice technology can help alert clinicians to areas that need to be addressed. For example, in a 2022 study, Topaz and his colleagues analyzed recordings of conversations between home care nurses and patients and found that half of the problems patients reported during the conversations were not documented in the EHR. “That’s a huge area for improvement,” he says. Some problems may not be severe or may not fall within the nurse’s purview, but that still leaves areas that should be documented and addressed.

Dr Kumah-Crystal says that Vanderbilt is collaborating with Epic to develop speech recognition for EHR. Clinicians can use their smartphones or desktop computers to ask the EHR a question, such as the patient’s last blood glucose level, and quickly receive the answer. The system is currently being pilot tested, primarily in ambulatory settings, and Dr Kumah-Crystal expects usage to increase next year, when physicians will be able to verbally input orders for admitted inpatients.

It is likely only a matter of time before ambient voice technology is widely available for inpatient and OR settings. This means nurses will be able to speak as they deliver care instead of typing on a computer, and NLP will help them do that, Gabriel says. “When nurses can speak and express themselves in a more fluid, natural way, it makes it easier for them to do their documentation,” she says. “NLP can then turn that data into structured data that creates a rich repository of information that benefits nurses and patients.”

Improved coding

OR revenue depends on accurate coding so that billing completely reflects what was done for the patient. Yet relevant information can easily be lost in unstructured areas of the EHR, such as physicians’ progress notes. NLP can extract relevant information from these notes and assign the correct codes to improve billing accuracy.

At Vanderbilt, a tool is used to scan notes in the EHR to identify conditions that are important to be coded but have not been included in the patient’s problem list. Dr Kumah-Crystal notes that in the future, this type of tool could be used to proactively suggest to clinicians that they add to the list as the patient is being treated. For example, if hyperkalemia was mentioned in the progress notes, the computer could suggest that it is something that needs to be considered. “It would raise awareness of comorbidities that clinicians should be addressing,” Dr Kumah-Crystal says. “Things can be buried in the EHR, and the good thing about computers is that they can evaluate a large amount of data and feed that back.”
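A minimal sketch of the gap check described above might compare conditions found in free-text notes with the problem list. The watch list, function name, and inputs here are hypothetical, and the sketch does not handle negation (“hyperkalemia resolved” or “no hyperkalemia” would still count as a mention); it is not the Vanderbilt tool.

```python
import re

# Hypothetical watch list of codable conditions.
WATCHED_CONDITIONS = ("hyperkalemia", "hypokalemia", "anemia", "atrial fibrillation")


def missing_from_problem_list(notes: list[str], problem_list: list[str]) -> list[str]:
    """Return watched conditions mentioned in notes but absent from the problem list."""
    listed = {p.lower() for p in problem_list}
    mentioned = set()
    for note in notes:
        text = note.lower()
        for condition in WATCHED_CONDITIONS:
            # Whole-word match so one term is not found inside another.
            if re.search(rf"\b{re.escape(condition)}\b", text):
                mentioned.add(condition)
    return sorted(mentioned - listed)
```

Given a progress note reading “K 6.1, consistent with hyperkalemia” and a problem list containing only hypertension, the function returns `["hyperkalemia"]`, the kind of suggestion Dr Kumah-Crystal describes surfacing to clinicians.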

Preauthorization

Elevance is working on the use of NLP to address preauthorization for services, including surgery. “This is one of the frontier areas,” Dr Overhage says, referring to a wider trend that moves beyond NLP to natural language understanding (NLU), which incorporates information from a variety of sources. “It helps us meet our responsibility to employers and state and national agencies to not squander resources.”

An example is total knee arthroplasty, where Dr Overhage says the question is, “Have we exhausted all the conservative therapies, so a patient doesn’t have surgery they don’t need?” NLU helps answer that question by marrying structured data with unstructured data from a variety of sources. Structured data might include claims reports showing the patient went to physical therapy and picked up a pain prescription (EHR would only show the provider ordered the medication). Unstructured data from progress notes might show that the patient’s knee pain has not improved and is keeping them from daily activities. That would be converted into structured data, and all the information would be combined by applying techniques such as ML to reach a decision.
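The way structured claims data and NLP-derived facts feed one question can be sketched as below. Dr Overhage describes combining them with techniques such as ML; this sketch swaps in a simple hand-written rule so the logic is visible. The field names and the six-visit physical therapy threshold are hypothetical and do not reflect Elevance’s criteria.

```python
from dataclasses import dataclass


@dataclass
class PatientEvidence:
    # Structured facts, eg, from claims data (hypothetical fields).
    physical_therapy_visits: int
    pain_prescription_filled: bool
    # Facts an NLP step might extract from progress notes (hypothetical fields).
    pain_not_improved: bool
    limits_daily_activities: bool


def conservative_therapy_exhausted(e: PatientEvidence, min_pt_visits: int = 6) -> bool:
    """Toy rule: conservative therapy was tried, yet symptoms persist."""
    tried_conservative = e.physical_therapy_visits >= min_pt_visits and e.pain_prescription_filled
    still_symptomatic = e.pain_not_improved and e.limits_daily_activities
    return tried_conservative and still_symptomatic
```

Note that the claims data supply “picked up the prescription,” which the EHR alone could not confirm, while the notes supply “pain has not improved.” Neither source answers the question on its own.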

One benefit of this is that it helps explain the result to patients, who may be upset if their physician recommended surgery, but the insurance provider did not authorize it. “At the end of the day, I should be able to tell you why we made that decision,” Dr Overhage says. For example, the algorithm that processes data from the NLU process might show that trying a brace first is better because it is low cost and low risk.

Elevance is still developing preauthorization algorithms. Part of the challenge is the need to have a high confidence level that the results are accurate. “I don’t have to be absolutely perfect, but I have to be pretty darn good and know when I am not confident,” Dr Overhage says. When confidence is lower, a provider must verify the information, adding another step.
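The “know when I am not confident” principle is often implemented as a confidence threshold: the system acts on its own only above a cutoff and otherwise hands the case to a person. The 0.9 cutoff and the names below are assumptions for illustration, not Elevance’s settings.

```python
from typing import NamedTuple


class Decision(NamedTuple):
    outcome: str       # eg, "approve" or "suggest brace first"
    confidence: float  # model's estimated probability, 0.0 to 1.0


def route(decision: Decision, threshold: float = 0.9) -> str:
    """Act automatically only when confidence clears the threshold; otherwise send to a human."""
    if not 0.0 <= decision.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if decision.confidence >= threshold:
        return f"auto: {decision.outcome}"
    return "human review"
```

Raising the threshold sends more cases to human review, which is the extra step Dr Overhage mentions, in exchange for fewer automated mistakes.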

NLU fosters integrated decision making that can be leveraged in many ways, including precision medicine that tailors feedback based on a patient’s individual situation.

Optimal quality

Registries such as ACS NSQIP are a valuable source of insight for promoting quality and providing the means to conduct research for improving patient care. Yet submitting patient data to the registry is largely manual and time-consuming. “A lot of the data is locked in unstructured notes such as operative notes,” says Dr Morgan. “NLP reduces the amount of manual data extraction and analysis of the surgical procedure and outcomes.”

Carilion worked with MetiStream on an NLP solution that extracts data on adenomas detected by endoscopy from operative notes, pathology reports, and radiology reports. These data, combined with structured data such as patient demographics and provider profiles, give endoscopists a dashboard where they can see their adenoma detection rate. A future goal is to use this type of information to identify patients at high risk for colon cancer, Dr Morgan says. Those patients could be prioritized for a colonoscopy.
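Adenoma detection rate is commonly defined as the share of an endoscopist’s screening colonoscopies in which at least one adenoma is found. Assuming NLP has already pulled an adenoma count for each procedure out of the pathology reports, the dashboard figure reduces to a simple calculation. The input format below is hypothetical, not MetiStream’s.

```python
from collections import defaultdict


def adenoma_detection_rates(procedures: list[tuple[str, int]]) -> dict[str, float]:
    """procedures: one (endoscopist, adenomas_found) pair per colonoscopy.

    ADR = colonoscopies with at least one adenoma / total colonoscopies.
    """
    totals = defaultdict(int)
    with_adenoma = defaultdict(int)
    for endoscopist, adenomas_found in procedures:
        totals[endoscopist] += 1
        if adenomas_found > 0:
            with_adenoma[endoscopist] += 1
    return {name: with_adenoma[name] / totals[name] for name in totals}
```

For example, an endoscopist who finds adenomas in 2 of 4 colonoscopies has an ADR of 0.5. The hard part is not the arithmetic but reliably extracting the adenoma counts from free-text reports.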

Carilion and MetiStream are now expanding the program’s scope to make it easier to submit information to ACS NSQIP. The software, called Ember, will automatically extract and analyze data from Carilion’s enterprise data warehouse and enter it into the ACS NSQIP database. Both the software and storage are cloud based, so staff can easily log in, and the data should be secure. The solution will also support human validation when needed, which will still be faster than traditional data entry because Ember will direct the healthcare provider to where they need to look in the EHR to obtain the relevant information for that patient. The plan is to launch a beta product in January 2023.

Decision support

Computers can analyze structured and unstructured data (through NLP) to alert clinicians to situations that need attention. Dr Kumah-Crystal notes that computers can easily read structured data such as a temperature, but if someone writes “fever” in notes, the computer needs to be taught that it is something the clinician should be alerted to.
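The contrast between a temperature value and the word “fever” can be sketched as follows. The 38.0 °C cutoff is a commonly used threshold but is an assumption here, as are the word lists; the negation check (so that “denies fever” does not trigger) is a simple rule, not a trained model.

```python
import re
from typing import Optional

FEVER_THRESHOLD_C = 38.0  # assumed cutoff; institutions may use a different value
FEVER_WORDS = re.compile(r"\b(fever|febrile|pyrexia)\b")  # "afebrile" does not match
NEGATION = re.compile(r"\b(no|denies|without)\b")


def should_alert(temp_c: Optional[float], note: str) -> bool:
    # Structured data: a number the computer can compare directly.
    if temp_c is not None and temp_c >= FEVER_THRESHOLD_C:
        return True
    # Unstructured data: look for a fever mention that is not negated.
    for sentence in re.split(r"[.;\n]", note.lower()):
        mention = FEVER_WORDS.search(sentence)
        if mention and not NEGATION.search(sentence[:mention.start()]):
            return True
    return False
```

The structured branch is one line; nearly all of the code goes to the free-text branch, which mirrors Dr Kumah-Crystal’s point that the computer must be taught what “fever” in a note means.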

That is achieved through a “concept of learning” system, where the computer is given a large amount of data that includes how patients responded to treatment. “The computer scans lots of data to find common themes that enable it to make suggestions to the clinician,” Dr Kumah-Crystal says.

Based on themes and algorithms developed from data, the computer might be able to alert clinicians to situations like an increased risk for a complication. In fact, the most common use of NLP in research related to surgery is predicting postoperative complications, according to a systematic review and meta-analysis by Mellia and colleagues. “Studies show that, in general, NLP can result in a 15% to 25% improvement in risk identification,” Topaz says.

Although support for use of NLP is evolving, John Fischer, MD, director of the clinical research program in the division of plastic surgery and associate professor of surgery at the Hospital of the University of Pennsylvania in Philadelphia, and a coauthor on the Mellia study, envisions that future NLP-based algorithms could serve as sentinels in the background of the EHR, piecing together information that could flag a complication. For example, an algorithm might detect that a patient has tachycardia, a fever, and an elevated white blood cell count; combined with a recent CT scan showing an abscess at the postoperative site, those findings would alert the clinician to the possibility of sepsis related to a deep organ infection.
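Dr Fischer’s sentinel example can be sketched as a rule that joins structured vital signs and laboratory values with a finding pulled from the free-text CT report. The heart rate, temperature, and white blood cell cutoffs below follow the familiar SIRS-style thresholds and are illustrative only; the keyword check stands in for a real NLP step. This is not a validated sepsis screening tool.

```python
from dataclasses import dataclass


@dataclass
class PostopSnapshot:
    heart_rate: int   # beats per minute (structured)
    temp_c: float     # degrees Celsius (structured)
    wbc: float        # white blood cells, x10^9/L (structured)
    ct_report: str    # radiology report text (unstructured)


def flag_possible_deep_infection(s: PostopSnapshot) -> bool:
    """Flag only when all four signals line up (illustrative thresholds)."""
    tachycardia = s.heart_rate > 90
    fever = s.temp_c > 38.0
    leukocytosis = s.wbc > 12.0
    report = s.ct_report.lower()
    # Crude stand-in for NLP: "abscess" mentioned and not explicitly ruled out.
    abscess = "abscess" in report and "no abscess" not in report
    return tachycardia and fever and leukocytosis and abscess
```

Requiring all four signals keeps false alarms down at the cost of missing some true infections, the same trade-off between true positives and true negatives that Dr Fischer describes.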

Dr Fischer notes that NLP is better than manual chart review for identifying those who truly do not have a complication; in other words, a true negative. However, it is not as effective as a human in identifying that a person definitely has a complication, in other words, a true positive. “A true positive is harder because the NLP is simply a tool, but in manual chart review, you have some element of human judgment,” Dr Fischer says. “No tool or instrument is perfect.” NLP is good at extracting information from large data sets, which is beneficial for quality and research purposes.
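The distinction Dr Fischer draws maps onto standard screening measures computed from a table of true and false positives and negatives. The counts in the usage note are invented for illustration and are not results from the Mellia review.

```python
def screening_metrics(tp: int, fp: int, tn: int, fn: int) -> dict[str, float]:
    """Standard screening measures from confusion-matrix counts."""
    return {
        "sensitivity": tp / (tp + fn),  # share of real complications that were flagged
        "specificity": tn / (tn + fp),  # share of complication-free patients correctly cleared
        "ppv": tp / (tp + fp),          # when flagged, how often a complication is really present
        "npv": tn / (tn + fn),          # when cleared, how often the patient is really complication-free
    }
```

With hypothetical counts of 8 true positives, 10 false positives, 90 true negatives, and 2 false negatives, the negative predictive value is about 0.98 while the positive predictive value is only about 0.44: a tool can be very trustworthy when it says “no complication” and much less so when it raises a flag.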

NLP has the capacity to benefit patients, organizations, and the nursing profession. “NLP is a key component for clinical decision support and capturing all the things that nurses do,” Gabriel says. Learn more about the role of NLP in surgery in Part 2.

—Cynthia Saver, MS, RN, is president of CLS Development, Inc, Columbia, Maryland, which provides editorial services to healthcare publications.

References

Mellia J A, Basta M N, Toyoda Y, et al. Natural language processing in surgery: A systematic review and meta-analysis. Ann Surg. 2021;273(5):900-908.

Rabindranath G. Natural language processing: A simple explanation. Towards Data Science. 2020.

Reports and Data. ICU/Computer-assisted coding market.

Sharma S. Top 10 NLP use cases in the pharma & healthcare industry. Graneber. 2022.

Song J, Zolnoori M, Scharp D, et al. Do nurses document all discussions of patient problems and nursing interventions in the electronic health record? A pilot study in home healthcare. JAMIA Open. 2022;5(2):ooac034.